Tokenization

Tokenization is the process of breaking down text into smaller units called tokens, which are the basic building blocks that language models use to understand and generate language. In the context of AI and large language models (LLMs), a token can be as short as one character or as long as one word, depending on the language and the tokenizer used.

Why Tokenization Matters

Language models like GPT-3, GPT-4, and others do not process raw text directly. Instead, they convert text into tokens, which are then mapped to numerical representations that the model can process. The number of tokens in a prompt or conversation determines how much information the model can consider at once (the context window).

How Tokenization Works

- Splitting Text: The tokenizer splits input text into tokens based on rules or learned patterns. For example, the word "tokenization" might be split into ["token", "ization"] or kept as a single token, depending on the tokenizer.

- Mapping to IDs: Each token is mapped to a unique integer ID, which is used as input to the model.

- Handling Special Characters: Tokenizers handle punctuation, spaces, and special characters in a way that maximizes efficiency and model performance.

Examples

| Text Input | Tokens Generated | Number of Tokens |

|---|---|---|

| Hello, world! | ["Hello", ",", "world", "!"] | 4 |

| Artificial Intelligence | ["Artificial", " Intelligence"] | 2 |

| GPT-4 is amazing. | ["GPT", "-", "4", " is", " amazing", "."] | 6 |

Tokenization and Model Limits

The context window of a model is measured in tokens, not characters or words. For example, if a model has a 4,096-token limit, it can process up to 4,096 tokens in a single prompt or conversation. Exceeding this limit means older tokens are dropped or ignored.

Practical Tips

- When preparing prompts for LLMs, be aware of token limits to avoid losing important context.

- Use online tools or libraries (like OpenAI's tiktoken) to estimate token counts for your text.

- Remember that tokenization can split words in unexpected ways, especially for rare or compound words.

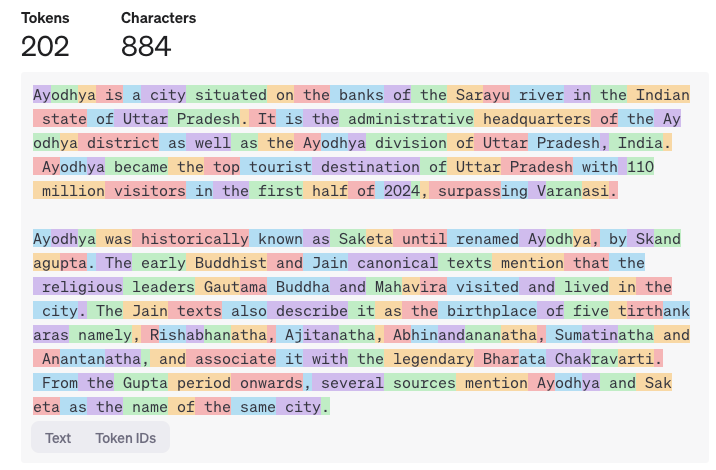

Tokens

Below image shows the tokens. Each token is shown in different colors.